Stable Diffusion Seeds

Introduction

In the realm of AI-generated images, the concept of Seeds holds an enigmatic power, often surrounded by confusion and misinformation. This guide aims to unravel the mysteries behind Stable Diffusion Seeds, providing clarity on what they are, how they function, and, most importantly, how you can harness their potential to fine-tune your generated images.

Understanding the Essence of Stable Diffusion Seeds

A Seed, in the context of Stable Diffusion, is simply a numerical value from which the algorithm generates noise. Contrary to misconceptions, a Seed is not the noise image itself, nor does it encapsulate all parameters used in image generation.

It remains detached from specific text prompts, poses, clothing, backgrounds, or artistic styles. Stable Diffusion’s noise generator is pseudorandom rather than truly random: given the same Seed, it reliably reproduces the same noise pattern, which lays the foundation for repeatable image generation.
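The idea of a Seed producing repeatable noise can be illustrated with a minimal sketch. This is not Stable Diffusion’s actual noise generator; it assumes Python’s standard `random` module as a stand-in, and `noise_from_seed` is a hypothetical helper name.

```python
import random


def noise_from_seed(seed: int, count: int = 4) -> list[float]:
    """Return `count` Gaussian noise samples derived only from `seed`."""
    # Stand-in for the real generator: a private pseudorandom source,
    # so the global random state is left untouched.
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(count)]


same_a = noise_from_seed(1234)
same_b = noise_from_seed(1234)
other = noise_from_seed(1235)

print(same_a == same_b)  # same Seed, same noise
print(same_a == other)   # different Seed, different noise
```

The Seed itself is just the integer passed in; the noise is whatever the generator derives from it.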

Demystifying Stable Diffusion’s Process

To grasp the intricacies of Stable Diffusion’s image generation, consider the algorithm’s ability to consistently reproduce a pattern of noise from a Seed. The resulting images may seem random to human eyes, but they follow a discernible structure.

Stable Diffusion starts from this initial noise and refines it, step by step, into a finished image. Because the starting noise is repeatable, several use cases open up, empowering users to:

- Reproduce images across sessions with the same Seed, prompt, and parameters.

- Collaborate by working from someone else’s Seed, prompt, and parameters.

- Make subtle tweaks by adjusting the prompt or parameters without altering the overall image composition significantly.
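To make the first two use cases concrete, here is a rough sketch of saving and sharing the values that need to match. The `GenerationSettings` class and its field names are illustrative assumptions, not a real Stable Diffusion file format, and in practice the model checkpoint and sampler need to match as well.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class GenerationSettings:
    # Hypothetical field names chosen for illustration only.
    seed: int
    prompt: str
    steps: int = 20
    cfg_scale: float = 7.0
    width: int = 512
    height: int = 512
    sampler: str = "Euler a"


def to_json(settings: GenerationSettings) -> str:
    """Serialize the settings so they can be saved or sent to someone else."""
    return json.dumps(asdict(settings), sort_keys=True)


def from_json(text: str) -> GenerationSettings:
    """Rebuild the settings from a saved or shared JSON string."""
    return GenerationSettings(**json.loads(text))


original = GenerationSettings(seed=1234, prompt="a lighthouse at dusk")
shared = to_json(original)
restored = from_json(shared)
print(restored == original)  # the round trip preserves every value
```

Keeping the Seed next to the prompt and parameters in one record avoids the common mistake of sharing a Seed on its own.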

Exploring the Influence of Stable Diffusion Seeds and Prompts

Detailed experiments conducted by the Stable Diffusion community suggest that certain Seeds have a higher probability of producing images with specific characteristics. Users have identified Seeds associated with distinct colour palettes or compositions, which gives a strategic advantage in obtaining desired outputs.

/u/wonderflex’s experiments reveal intriguing insights:

- Changing the Seed while keeping the prompt and parameters constant results in markedly different output images.

- Modifying the text prompt, even with a single word, can alter output images without drastically changing their general look or colour palette.
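If you want to run a comparison like this yourself, one approach is to pair every Seed with every prompt variant while leaving all other parameters alone. The sketch below only builds the list of jobs; `build_grid` is a hypothetical helper, and actually rendering each job is left to whichever tool you use.

```python
from itertools import product


def build_grid(seeds: list[int], prompts: list[str]) -> list[dict]:
    """Pair every seed with every prompt variant."""
    return [
        {"seed": seed, "prompt": prompt}
        for seed, prompt in product(seeds, prompts)
    ]


seeds = [101, 202, 303]
prompts = ["a cat in a garden", "a dog in a garden"]  # one word changed

for job in build_grid(seeds, prompts):
    print(job["seed"], "|", job["prompt"])
```

Reading the results row by row (same Seed, different prompt) and column by column (same prompt, different Seed) makes both effects easy to compare.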

Navigating the Stable Diffusion Web UI Seed Field

To put Seeds to work, you need to know where they live in the Stable Diffusion Web UI. In both the txt2img and img2img tabs, you’ll encounter a field labelled Seed. Key considerations include:

- The default setting is -1, prompting Stable Diffusion to pull a random Seed for image generation.

- You can manually input a specific Seed number into the field.

- The dice button resets the Seed to -1, while the green recycle button populates it with the Seed used in your generated image.
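The behaviour of the Seed field can be summarised in a small sketch. This is not the Web UI’s own code: `resolve_seed` is a hypothetical function, and the 32-bit upper bound is an assumption made for illustration.

```python
import random
from typing import Optional

MAX_SEED = 2**32 - 1  # assumed upper bound, for illustration only


def resolve_seed(field_value: int, rng: Optional[random.Random] = None) -> int:
    """Return the seed to use for a given value of the Seed field."""
    if field_value == -1:
        # -1 means "pick a random Seed for me".
        rng = rng or random.Random()
        return rng.randint(0, MAX_SEED)
    # Any other value is used exactly as typed.
    return field_value


print(resolve_seed(1234))  # a fixed Seed is passed through unchanged
print(resolve_seed(-1))    # a fresh random Seed on every call
```

In these terms, the dice button sets the field back to -1, and the recycle button replaces -1 with the concrete Seed that was picked for the last image.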

Maintaining Consistency with Stable Diffusion Seeds

Suppose you’ve generated a batch of images and wish to retain their overall look while making minor modifications. The process involves populating the Seed field with the desired Seed from a selected image, ensuring consistency in the generated images.

Consider the following steps:

- Select the generated image.

- Hit the green recycle button to populate the Seed field.

- Make necessary tweaks to the text prompt or parameters while preserving the Seed for consistency.
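The steps above can be sketched in code as well. The `Settings` class and `batch_seeds` helper are hypothetical, and the sketch assumes that each image in a batch uses the first Seed plus its position in the batch; check the Seed shown for your selected image rather than relying on that assumption.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Settings:
    # Hypothetical, trimmed-down settings record for illustration.
    seed: int
    prompt: str
    steps: int = 20


def batch_seeds(first_seed: int, batch_size: int) -> list[int]:
    # Assumption: image i in a batch uses first_seed + i.
    return [first_seed + i for i in range(batch_size)]


seeds = batch_seeds(first_seed=1000, batch_size=4)
chosen = seeds[2]  # say the third image in the batch is the one you liked

base = Settings(seed=chosen, prompt="portrait, red scarf")
# Tweak only the prompt; the Seed and every other value stay the same.
variant = replace(base, prompt="portrait, blue scarf")
print(variant)
```

Changing one thing at a time this way makes it clear which tweak caused which difference in the output.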

Modifying Images with Precision

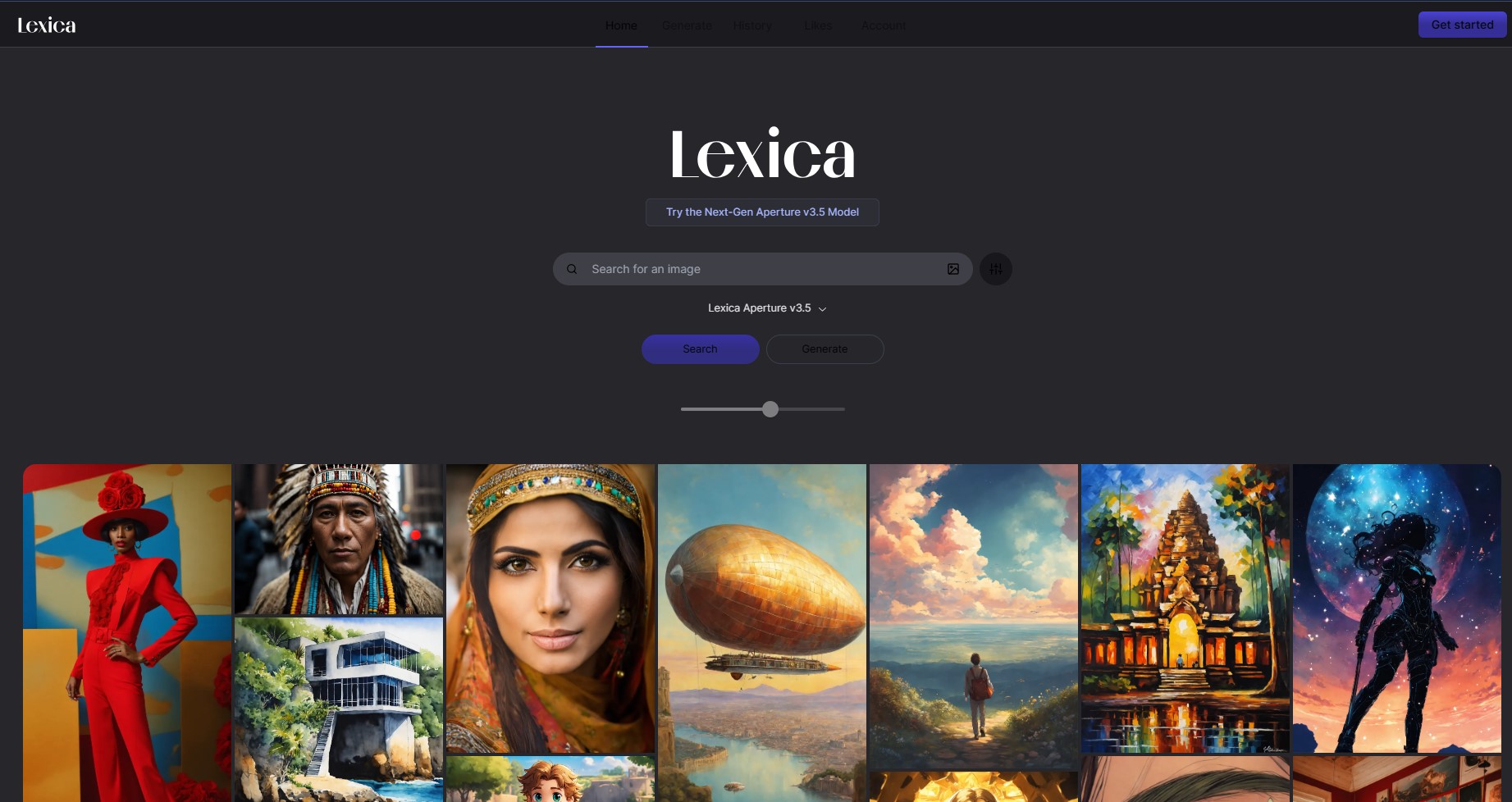

When it comes to precise modifications, starting with a specific Seed is crucial. Explore freely available AI-generated images and their associated Seeds through platforms like Lexica. By selecting a Seed known for specific characteristics, you can fine-tune your generated images with precision.

How to Find Seeds

To discover Seeds linked to desired characteristics, leverage the vast repository of AI-generated images available in Stable Diffusion communities. Lexica proves to be an efficient tool for sorting through images and finding associated Seeds.

By filtering results to Stable Diffusion 1.5, you gain access to valuable information, including the text prompt, parameters, and the coveted Seed number.

In this tutorial, we’ll guide you through the process of finding Stable Diffusion Seeds so that you can use them to generate images with Stable Diffusion. This should take about 5 minutes, so grab a cup of coffee and let’s get started!

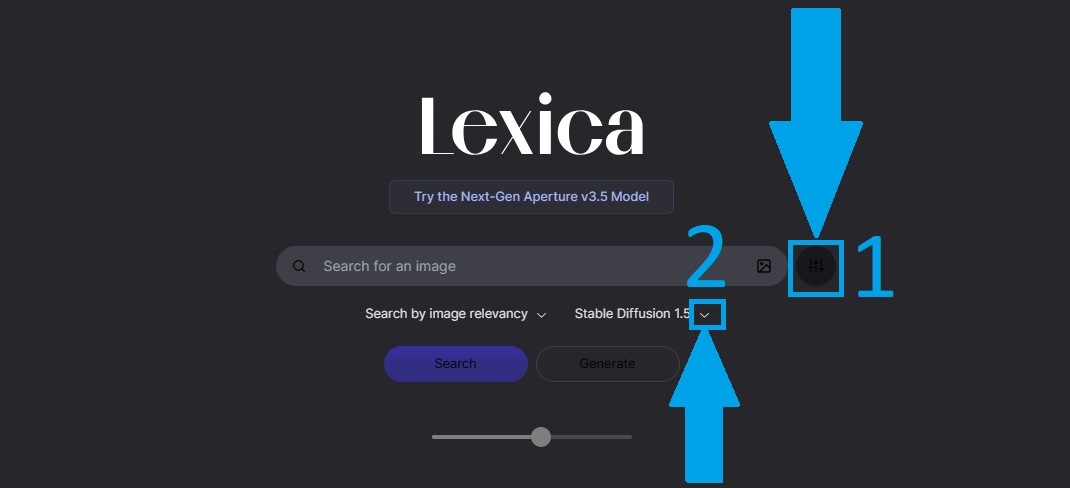

Step 1: Visit Lexica

Go to the Lexica website, as shown in the image above.

Step 2: Select the Correct Filter

As seen in the image in step 1, the filter is set by default to Lexica Aperture V3.5 (this could be a different version if you are reading this long after the article was published). Switch the search filter to Stable Diffusion 1.5, as shown in the image above.

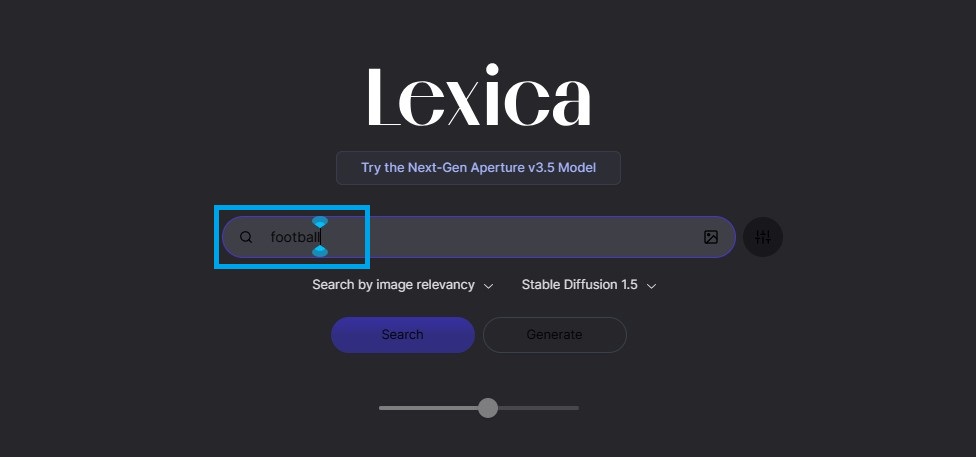

Step 3: Search for an AI-Generated Image

Search for an AI-generated image by typing a description of the desired image and clicking the search button, as shown in the image above.

Step 4: View the Image’s Stable Diffusion Seed

Once the images show up, click on any of the results to see the image’s Seed, as shown in the image above.

Armed with these tools and insights, you’re well-equipped to embark on a journey of creative exploration within the Stable Diffusion ecosystem. Best of luck and enjoy the fascinating world of AI-generated images!

Conclusion

In conclusion, understanding and effectively using Stable Diffusion Seeds opens up a realm of possibilities for consistent, controlled, and personalized image generation. Whether replicating others’ work or seeking a reliable starting point, mastering the art of Seeds in the Stable Diffusion Web UI empowers you to unleash the full potential of AI-generated images.

Frequently Asked Questions and Their Answers

What is a seed in Stable Diffusion?

A seed in Stable Diffusion is a numerical value used to generate noise, a fundamental component in the AI image generation process. It does not represent the image itself but influences the algorithm’s ability to consistently reproduce patterns of noise from the given seed.

How does changing the seed impact image generation?

Changing the seed while keeping the prompt and parameters constant results in different-looking output images. This variation allows users to explore diverse visual outcomes while maintaining consistency in other aspects of the generated images.

Where can I find specific seeds for desired image characteristics?

Users can explore freely available AI-generated images in Stable Diffusion communities, particularly through platforms like Lexica. By filtering results to Stable Diffusion 1.5, users can discover associated seeds along with text prompts and parameters.

How can I use seeds to modify generated images with precision?

To modify generated images with precision, users can start with a specific seed known for desired characteristics. By inputting this seed and adjusting the text prompt or parameters, users can make controlled tweaks while preserving the overall look of the image.
