AnimateDiff Tutorial

Introduction

In today’s fast-paced digital landscape, video has become a powerful way to captivate audiences, evoke emotions, and drive engagement. Yet for all its appeal, video creation is often seen as complex, and many innovative ideas go unrealized as a result.

Enter AnimateDiff, an AI tool that turns a text description into a short animated video with very little effort. This guide walks through how to use it, step by step.

Animate with AnimateDiff

What is AnimateDiff?

AnimateDiff is your digital sorcerer, weaving enchanting tales through the art of video creation. It reads your text description and generates a short animated clip that matches it.

Unlike a standard text-to-image model, which produces a single still image, AnimateDiff produces a sequence of frames. It does this by combining a Stable Diffusion text-to-image model with a motion module (also called a motion adapter) that was trained separately to model movement between frames. You control the content through your prompt and the visual style through your choice of base model.
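To make the motion adapter (motion module) described above more concrete, here is a toy Python sketch of temporal self-attention, the kind of layer AnimateDiff’s motion module is built from: at each pixel position, a frame’s features attend to the features at that same position in every other frame, so information flows across time but not across space. This is a simplification under stated assumptions: each feature is a single number, and the learned query, key, and value projections, multiple channels, and positional encodings of the real module are left out. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_self_attention(frames):
    """Toy temporal attention; frames[t][y][x] is a single scalar feature.

    Simplified: scalar features, no learned projections, no positional encoding.
    For each spatial position (y, x), the values across all frames form a
    sequence, and each output value is an attention-weighted average of that
    sequence. Values at different spatial positions never mix.
    """
    num_frames = len(frames)
    height = len(frames[0])
    width = len(frames[0][0])
    out = [[[0.0] * width for _ in range(height)] for _ in range(num_frames)]
    for y in range(height):
        for x in range(width):
            seq = [frames[t][y][x] for t in range(num_frames)]
            for i, query in enumerate(seq):
                # Dot-product scores between this frame and every frame.
                weights = softmax([query * key for key in seq])
                out[i][y][x] = sum(w * v for w, v in zip(weights, seq))
    return out
```

If every frame is identical, each output equals its input, because a weighted average of identical values is that value; when frames differ, each frame’s output borrows from the others, which is how the module ties frames together over time.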

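Beyond the basic text-to-video workflow, you can switch base models to change the visual style, use a negative prompt to exclude unwanted elements, and fix a random seed to reproduce a result. Community tools add extras such as Motion LoRA and ControlNet. Note that the output is a short silent clip: AnimateDiff does not add music, voice-over, or narration.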

Getting Started with AnimateDiff

Now that you’re intrigued, let’s explore the steps to kickstart your creative journey.

Signup for an Account

- Visit the AnimateDiff project on Hugging Face or on Replicate.

- Click on the “Sign up” button.

- Enter your email, choose a strong password, and agree to the terms.

- Click “Create” and verify your account through the email link.

Navigating the AnimateDiff Interface

Once you are logged in to either of the platforms above, you’ll find a variety of tools at your disposal.

Interface Overview

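- Input pane (left): a text field for each model parameter, with the Run button at the bottom right.

- Output pane (right): the generated video, plus a log that reports on the generation process.

- Customization options: the base model (which sets the visual style), the motion module, the prompt and negative prompt, and sampling settings such as steps, guidance scale, and seed.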

Crafting Your First AnimateDiff Video

Step-by-Step Guide

Let’s walk through the steps to bring your creative vision to life. For this tutorial, I will be using the Replicate version of AnimateDiff to create an animation.

Time needed: about 30 minutes

In this tutorial, we’ll guide you through the process of using AnimateDiff to create your own animated content, in less than 30 minutes. Let’s get started!

Step 1: Access AnimateDiff

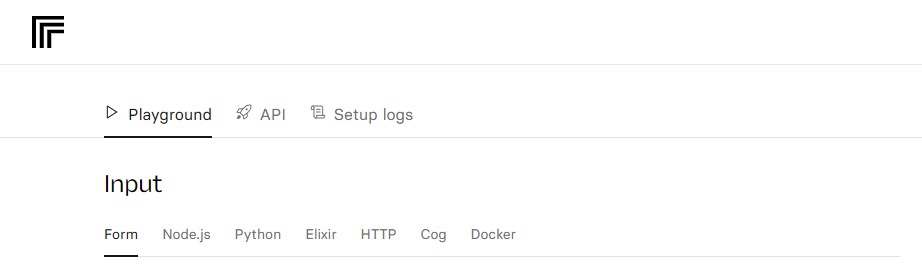

Go to the Replicate version of the project mentioned above. There, you should see an interface like the one in the image above.

Step 2: Inputting Main Workspace Parameters

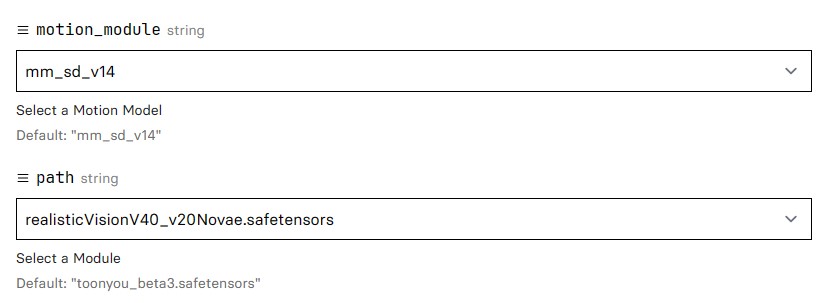

Enter each parameter in its text field. The parameters are:

- motion_module: the pretrained AnimateDiff motion module to use, i.e. the component that handles animation and movement across the frames produced by the base image model (for example, mm_sd_v15_v2). Input type: string.

- path: the base text-to-image checkpoint (a Stable Diffusion model file) that sets the look of the frames, for example realisticVisionV40_v20Novae.safetensors. Input type: string.

- prompt: a text description of what you want to see. You may enter text, ideas, or keywords, and AnimateDiff uses this prompt as the basis for the animation. Input type: string.

- n_prompt: the negative prompt, listing what the model should avoid generating (for example, “worst quality, low quality”). Input type: string.

- steps: the number of denoising (sampling) steps the model runs; more steps refine each frame further but take longer. This is not the number of frames. Input type: integer (a whole number).

- guidance_scale: how closely the output follows your prompt; higher values adhere more strictly, lower values give the model more freedom. Input type: floating-point number (a decimal such as 7.5).

- seed: the starting value for the random noise the generation begins from. Reusing the same seed with the same settings should reproduce the same result, while changing it gives a different variation. Input type: integer (a whole number).
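The steps, guidance_scale, and n_prompt settings work together. Stable Diffusion models, which AnimateDiff builds on, commonly use classifier-free guidance: at every denoising step the model predicts the noise twice, once conditioned on the prompt and once on the negative prompt (an empty prompt if n_prompt is blank), and combines the two using guidance_scale. The sketch below shows that combination on plain lists of numbers. It assumes the Replicate deployment follows this standard scheme, and the function name is hypothetical.

```python
def apply_guidance(noise_negative, noise_prompt, guidance_scale):
    """Classifier-free guidance on two noise predictions of equal length.

    noise_negative: prediction conditioned on n_prompt (or an empty prompt).
    noise_prompt:   prediction conditioned on prompt.
    Result = negative + guidance_scale * (prompt - negative), element-wise.
    A scale of 1.0 returns the prompt prediction unchanged; larger values
    push further away from the negative prompt and toward the prompt.
    """
    if len(noise_negative) != len(noise_prompt):
        raise ValueError("noise predictions must have the same length")
    return [n + guidance_scale * (p - n) for n, p in zip(noise_negative, noise_prompt)]


# One guided step with a guidance_scale of 7.5.
print(apply_guidance([0.0, 1.0], [1.0, 1.0], 7.5))  # [7.5, 1.0]
```

In a real run this combination is applied once per denoising step, so it happens `steps` times per generation, on full image-sized tensors rather than short lists.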

Step 3: Inputting a Text Description for Your Video

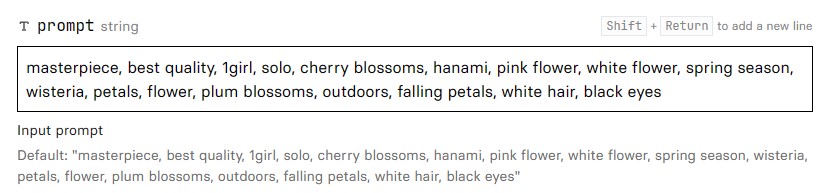

Now that you have looked over the interface and know what each field does, you can enter the data for each field and generate your video. First, decide what you want to generate, then type your idea into the prompt field.

I used the following prompt: “masterpiece, best quality, 1girl, solo, cherry blossoms, hanami, pink flower, white flower, spring season, wisteria, petals, flower, plum blossoms, outdoors, falling petals, white hair, black eyes”, as shown above.
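Because a prompt like this is just a list of comma-separated tags, it can help to keep it as a list while you experiment. The hypothetical helpers below (not part of AnimateDiff or Replicate) join tags into a prompt, drop accidental repeats, and flag any tag that also appears in the negative prompt, since asking for and against the same thing works against itself.

```python
def build_prompt(tags):
    """Join tags into a comma-separated prompt, dropping repeats.

    Comparison ignores case and surrounding spaces; the first occurrence wins
    and the original order is kept. Empty tags are skipped.
    """
    seen = set()
    unique = []
    for tag in tags:
        cleaned = tag.strip()
        key = cleaned.lower()
        if cleaned and key not in seen:
            seen.add(key)
            unique.append(cleaned)
    return ", ".join(unique)


def conflicting_tags(prompt, n_prompt):
    """Return tags (lowercased, sorted) that appear in both prompt and n_prompt."""
    def split(text):
        return {t.strip().lower() for t in text.split(",") if t.strip()}
    return sorted(split(prompt) & split(n_prompt))


print(build_prompt(["masterpiece", "best quality", "Masterpiece", " solo "]))
```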

Step 4: Tweaking Visual Effects

After entering your prompt, fill in the remaining parameters to get the most out of your video.

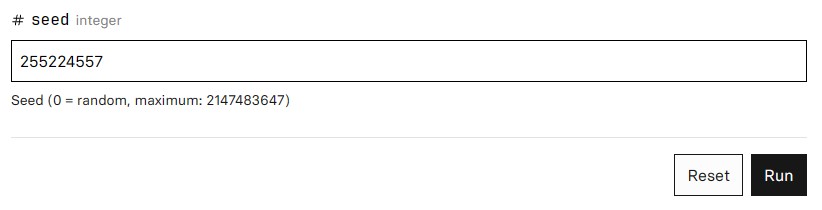

I used the following parameters, as shown in the images above:

- motion_module: mm_sd_v15_v2

- path: realisticVisionV40_v20Novae.safetensors

- prompt: masterpiece, best quality, 1girl, solo, cherry blossoms, hanami, pink flower, white flower, spring season, wisteria, petals, flower, plum blossoms, outdoors, falling petals, white hair, black eyes

- n_prompt: badhandv4, easynegative, ng_deepnegative_v1_75t, verybadimagenegative_v1.3, bad-artist, bad_prompt_version2-neg, teeth, worst quality, low quality, nsfw, logo

- steps: 25

- guidance_scale: 7.5

- seed: 255224557
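If you would rather script this than fill in the web form, the same settings can be written as a Python dictionary. The sketch below checks each value against the input types listed in Step 2 and can optionally send it with Replicate’s Python client (`replicate.run`). Assumptions: the API input names match the field names in the web form, and `model_ref` is a placeholder you must fill with the model’s “owner/model:version” string from its Replicate page. The helper names `validate` and `run_on_replicate` are hypothetical.

```python
import os

# The settings used in this walkthrough.
PAYLOAD = {
    "motion_module": "mm_sd_v15_v2",
    "path": "realisticVisionV40_v20Novae.safetensors",
    "prompt": ("masterpiece, best quality, 1girl, solo, cherry blossoms, hanami, "
               "pink flower, white flower, spring season, wisteria, petals, flower, "
               "plum blossoms, outdoors, falling petals, white hair, black eyes"),
    "n_prompt": ("badhandv4, easynegative, ng_deepnegative_v1_75t, "
                 "verybadimagenegative_v1.3, bad-artist, bad_prompt_version2-neg, "
                 "teeth, worst quality, low quality, nsfw, logo"),
    "steps": 25,
    "guidance_scale": 7.5,
    "seed": 255224557,
}

# Expected type of each field, as described in Step 2.
FIELD_TYPES = {
    "motion_module": str, "path": str, "prompt": str, "n_prompt": str,
    "steps": int, "guidance_scale": float, "seed": int,
}


def validate(payload):
    """Return a list of problems; an empty list means every field has the expected type."""
    problems = []
    for field, expected in FIELD_TYPES.items():
        if field not in payload:
            problems.append(f"missing {field}")
            continue
        value = payload[field]
        if isinstance(value, bool):
            ok = False  # bool is a subclass of int in Python, so reject it explicitly
        elif expected is float:
            ok = isinstance(value, (int, float))  # a whole number like 7 is a valid float
        else:
            ok = isinstance(value, expected)
        if not ok:
            problems.append(f"{field} should be {expected.__name__}, got {type(value).__name__}")
    return problems


def run_on_replicate(payload, model_ref):
    """Send the payload to a Replicate model (placeholder model_ref; network call).

    Requires `pip install replicate` and the REPLICATE_API_TOKEN environment variable.
    """
    import replicate  # imported lazily so validate() works without the client installed
    if not os.environ.get("REPLICATE_API_TOKEN"):
        raise RuntimeError("set REPLICATE_API_TOKEN first")
    return replicate.run(model_ref, input=payload)
```

Calling `validate(PAYLOAD)` before spending one of your limited runs catches mistakes such as typing `"25"` (a string) for steps.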

Step 5: Previewing the Video

Click the Run button at the bottom right of the left pane to generate the video and check how well it matches your intent. The button is shown in the image below:

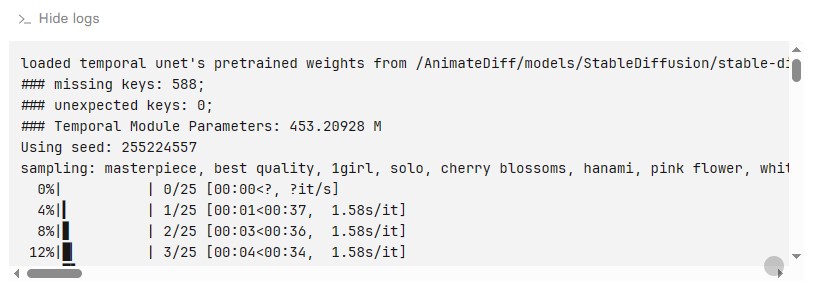

After clicking “Run”, watch the log in the right pane; it gives you extra information about the generation process.

Repeat the process, tweaking and rearranging your prompt and parameters, until you get the output you want.

NOTE: At the time of writing, you have just 5 tries to generate your video before the website blocks the generation option and asks for payment to continue.
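With a limited number of free runs, it pays to plan your tweaks. One simple approach, sketched below, is to keep the seed and every other setting fixed and vary a single parameter, so that differences between the resulting videos come from that parameter alone. The function name `plan_runs` and the budget of 5 (taken from the note above) are illustrative assumptions.

```python
def plan_runs(base, field, values, budget=5):
    """Return up to `budget` copies of `base` that differ only in `field`.

    Everything else, including the seed, stays the same, so any change in
    the output can be attributed to `field`.
    """
    if field not in base:
        raise KeyError(f"unknown parameter: {field}")
    runs = []
    for value in values[:budget]:
        run = dict(base)  # shallow copy; the base settings are not modified
        run[field] = value
        runs.append(run)
    return runs


base = {"steps": 25, "guidance_scale": 7.5, "seed": 255224557}
for run in plan_runs(base, "guidance_scale", [5.0, 6.5, 7.5, 9.0, 11.0, 13.0]):
    print(run)
```

Only the first five values are kept, so list the values you care about most first.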

Step 6: Exporting the Video

Once you are satisfied, download or share the video from the output pane.

The combined result of all of the steps above is the video “How to Animate with AnimateDiff”.

Voila! In just a few minutes, you’ve turned your imagination into reality with AnimateDiff.

Real-World Applications of AnimateDiff

If you’re still contemplating the possibilities, consider this example to kick-start your animated AI video creation.

Example: Enhancing Blog Posts

Imagine you’re a writer who usually relies on catchy headings to draw readers in. You could complement your content with a short animated video that conveys the essence of your blog post. Combining written and visual content this way can make your posts more engaging and strengthen your online presence, as I have tried to do for this article.

Conclusion

AnimateDiff marks a real shift in video creation. By adding a motion module to existing text-to-image models, it turns text prompts into short animated clips without any model training on your part, streamlining content production and turning static concepts into dynamic, engaging visuals.

I encourage you to try this AI tool yourself; its straightforward interface and capable results suit beginners and seasoned creators alike. Unleash your creativity with AnimateDiff, where innovation meets simplicity and ideas come to life in a visual symphony!

Some Frequently Asked Questions and Their Answers

What is AnimateDiff, and how does it work?

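AnimateDiff is a practical framework for animating personalized text-to-image (T2I) models without model-specific tuning. It inserts a motion modeling module, trained once on video data, into a frozen base text-to-image model. Because personalized models derived from the same base share its structure, the same motion module can animate them too.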

Are there any specific settings or advanced features in AnimateDiff?

Yes. AnimateDiff supports Motion LoRA, which adds specific camera movements such as zooming and panning, and community workflows combine it with ControlNet to guide and stylize videos.

Can I find user guides and workflows for AnimateDiff?

Certainly! Users have created guides and workflows, such as the ComfyUI AnimateDiff Guide/Workflows, providing valuable insights and setups.

Can I use AnimateDiff with existing personalized text-to-image models?

Yes, AnimateDiff offers a practical framework to animate most existing personalized text-to-image models without the need for specific tuning, simplifying the animation process.

References

- stable-diffusion-art.com: AnimateDiff Easy text-to-video.

- arxiv.org: Animate Your Personalized Text-to-Image Diffusion Models.

- openreview.net: AnimateDiff: Animate Your Personalized Text-to-Image.

- reddit.com: [GUIDE] ComfyUI AnimateDiff Guide/Workflows Including.
